PART 1: Local government and You

Upon being given the cultural probes kit, Sunah and I passed it on to our chosen participants, giving them time to coordinate with us and complete the kit.

As the participants worked through the kit, we discovered that they were rather antsy about completing it. The activities themselves were received as repetitive and seemed to be intended for a different age demographic.

Participant 2 was very vocal about how much she disliked the lack of clarity in some of the exercises: when she answered the questions, she wasn't sure how they related to the subject matter, which left her unsure of her answers. As we found out, this subjective approach to research can be difficult to interpret, both while collecting data and during analysis. The participants also found the kit repetitive and challenging to work with.

After running through the probe kit, we met up again and reflected on the responses to it. One of our participants liked the animal component while the other didn't, so we decided to run with the "subjective association" approach but wanted to make the data simpler to understand. One of the key issues we discovered was that the vagueness of the probe kit made it difficult to engage the participants.

Reflections on the first part of the probe kit (pink = negative, blue = positive)

PART 2: Our own probe

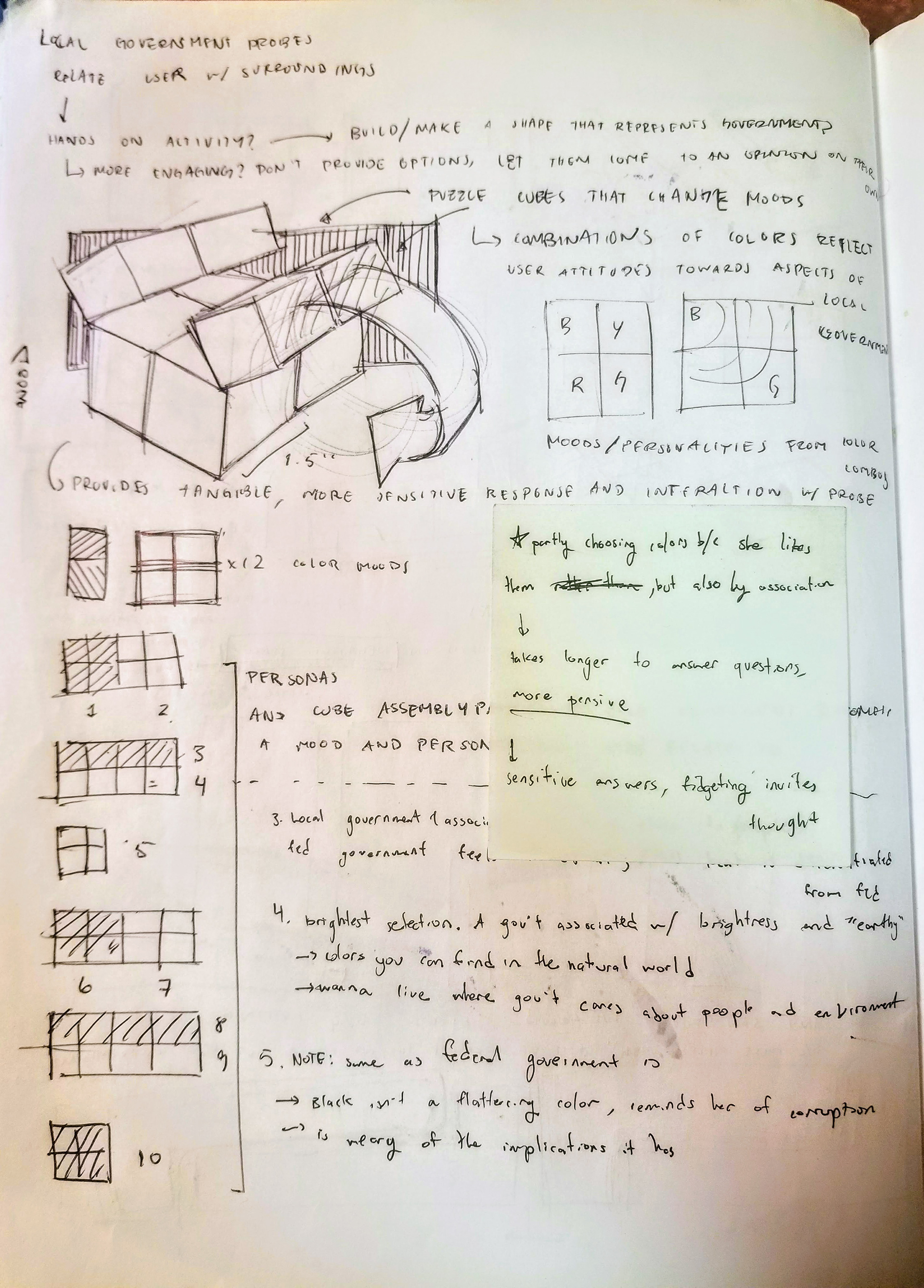

After receiving feedback on the first part of the probe, we approached the second part of the assignment carefully. We didn't quite like the vague quality of the first part, where participants are left comparing seemingly unrelated things. In addition, we thought that it was very easy to breeze through the entire kit. This led us toward creating a more engaging component for the kit.

As we reflected on what had and hadn't worked in the first part, we developed a "fidget" probe that could potentially encourage more sensitive answers to questions. Having a physical component could engage the participant more and perhaps encourage a more pensive attitude towards the kit itself.

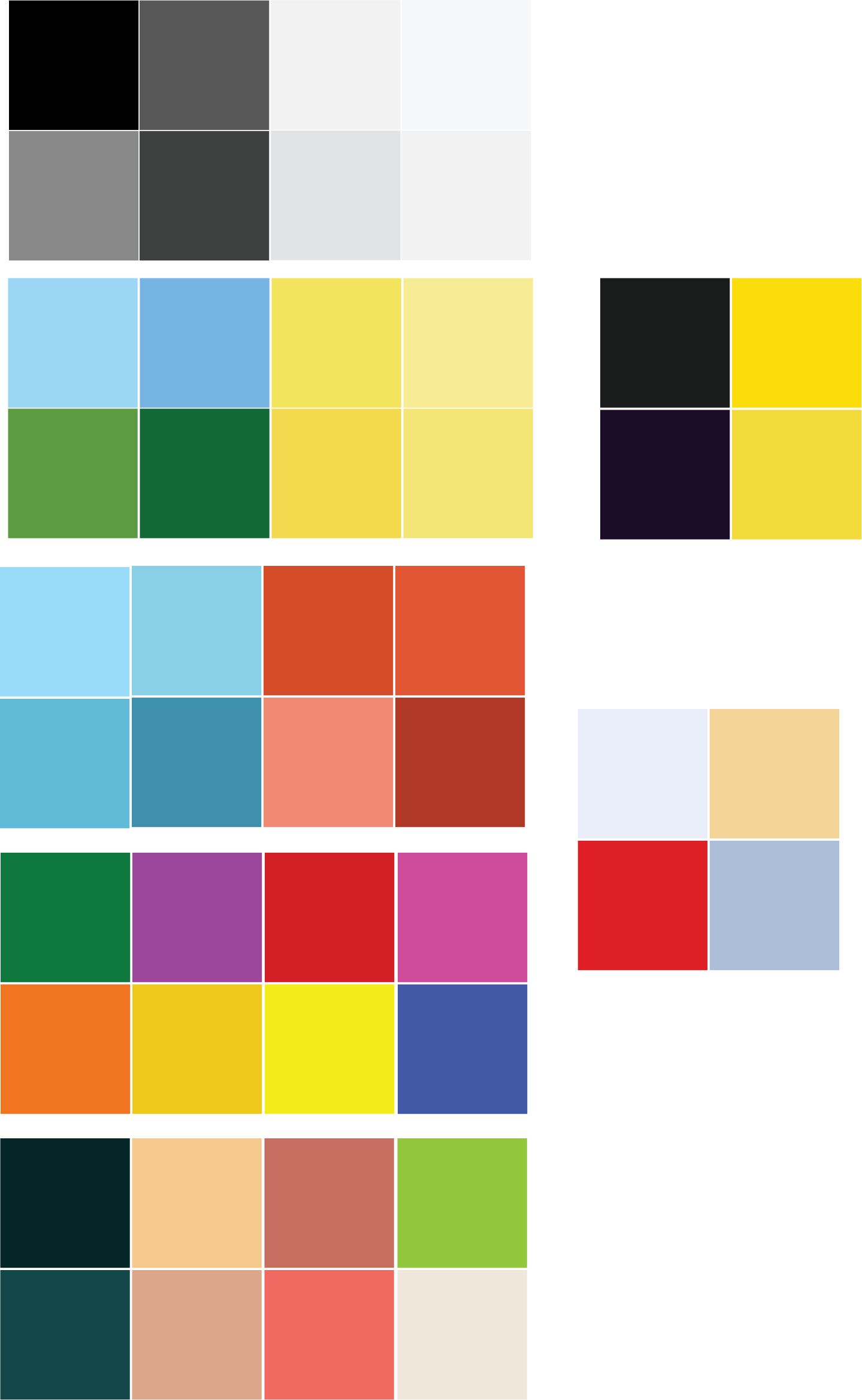

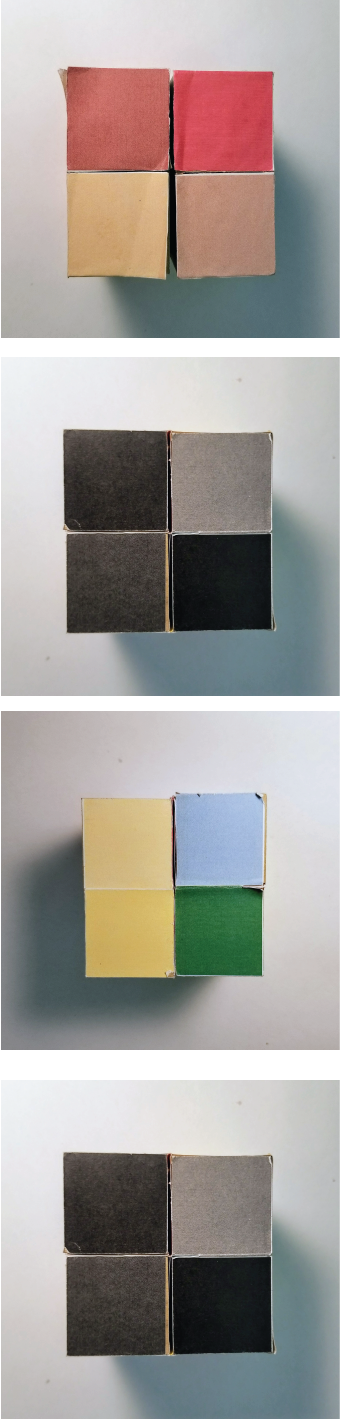

We decided to try to make a transformable puzzle cube that our participants could interact with while answering our questions. We figured it could be more entertaining and more personal than simply filling out a packet. We created a dozen color schemes, each with an associated mood, to put on the cube, then got to work machining it.

We cut the pieces out of some scrap beechwood, running it through the table saw to get eight identical cubes. Unfortunately for us, we grossly underestimated how difficult the cube would be to assemble, but after several hours of printing, cutting, and gluing, we finally put our probe together.

The following day, we were able to coordinate with our participants and ask them a new set of questions. Rather than matching the questions to unclear subjective values, we provided the color "moods" as options, in hopes that we'd be able to collect more understandable data.

Questions:

What color palette comes to mind when you think of your local government? Why?

What color palette comes to mind when you think of government in general? Why?

If it is different from that of your local government, why so?

What color palette would you most like your government to be like? Why?

What color palette would you least like your government to be like? Why?

Process of developing the cube as well as Participant

Participant interacting with probe as well as participant 2's results

Reflections on the additional probe:

Participant 2 said she liked how tangible the probe was and that she got to play with it. However, she also commented that it was easier to generate her own palette, as in the first exercise. From this, we can conclude that even though it seemed simpler to give the participant options, it ended up feeling a bit restricting. From a research analysis standpoint, giving the participant options is significantly easier to deal with than participant-generated answers. The comparison of local government to colors is already pretty subjective, so breaking it down into a fixed set of moods makes the data easier to analyze and present.

Another piece of feedback we got was that the cube was difficult to handle because it was so fragile. This is fair, and really a commentary on our craft rather than our research method.

In conclusion, there were positives and negatives to both the kit and our addition to it. We agree that a lot of development and improvement is still needed for this kit to be a valid research tool. It's difficult to tell whether any of the results from the kit in its current form would be helpful in any way. We discussed that if there were a more targeted question, the kit could be tailored to it rather than remaining vague.